In this article we will review the basic concepts related to Grassmann Manifolds and how they are applied in subspace comparison. The original motivation for this post can be found in the field of clustering of multi-antenna wireless communication [1], which is the focus of my research in the context of the Windmill project.

Quick recap on subspaces

between two subspaces

between two subspaces  .

.Before going into further details regarding manifolds and how they can be used in subspace comparison, let us first recall some concepts of linear algebra. We start by reviewing the concept of vector spaces. In short, a vector space ![]() is normally defined by a set of vectors

is normally defined by a set of vectors ![]() together with a field

together with a field ![]() (a collection of scalars). These vectors may be added together or multiplied by the elements of this field to describe new vectors. Note that in this sense the zero vector has to be defined in this space. Moreover, the span of a set of vectors is defined as the set of all possible linear combinations of this set, and the dimension of a vector space is the number of vectors in its smallest spanning set.

(a collection of scalars). These vectors may be added together or multiplied by the elements of this field to describe new vectors. Note that in this sense the zero vector has to be defined in this space. Moreover, the span of a set of vectors is defined as the set of all possible linear combinations of this set, and the dimension of a vector space is the number of vectors in its smallest spanning set.

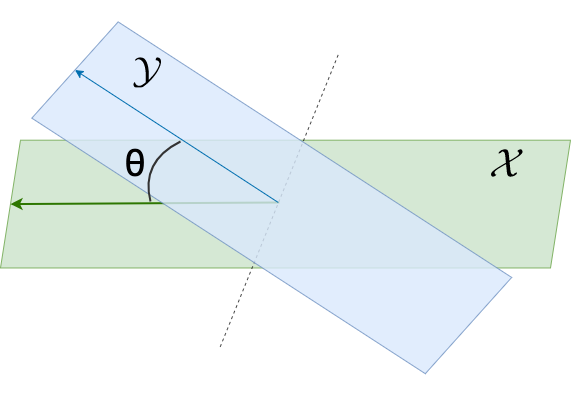

Similarly to how one would define angles between vectors, one can also define angles between spaces (or subspaces). In this case these angles are called principal angles.

Consider two subspaces

in the n-dimensional eucledian space

of dimensions

and

, respectively. The

principal angles

between these subspaces, and their corresponding principal vectors, are defined recursively by

subject to

The vectors

and

are the principal vectors. We will also define

as the collection of all principal angles between these vectors.

As we will discuss later, the principal angles induce several distance metrics on the Grassmann manifold. In practice, the principal angles between ![]() and

and ![]() can be computed from the singular value decomposition (SVD) of their orthonormal bases.

can be computed from the singular value decomposition (SVD) of their orthonormal bases.

Let the columns of

and

form the orthonormal bases for the subspaces

and

respectively. Then we have that the singular values of

correspond to the cosine of the principal angles

.

Grasmann Manifolds

. Extracted from [2].

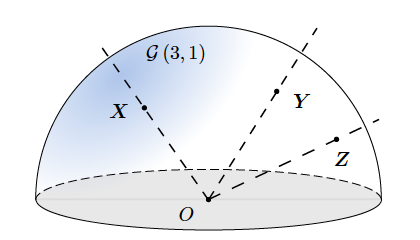

. Extracted from [2].The Grassmannian manifold ![]() refers to the

refers to the ![]() -dimensional space formed by all

-dimensional space formed by all ![]() -dimensional subspaces embedded into a

-dimensional subspaces embedded into a ![]() -dimensional real (or complex) Euclidean space. Let’s take the same example as in [2]. Think of embedding (mapping) lines that pass through the origin in

-dimensional real (or complex) Euclidean space. Let’s take the same example as in [2]. Think of embedding (mapping) lines that pass through the origin in ![]() into the 3-dimensional Euclidean space. Note that these lines are one-dimensional entities, i.e., can be described by a single value. Now think of a smooth geometry (semi-sphere) that intersects all these lines. The points laying on this semi-sphere are the points in the Grassmannian manifold

into the 3-dimensional Euclidean space. Note that these lines are one-dimensional entities, i.e., can be described by a single value. Now think of a smooth geometry (semi-sphere) that intersects all these lines. The points laying on this semi-sphere are the points in the Grassmannian manifold ![]() One often defines real Grassmannian manifolds as

One often defines real Grassmannian manifolds as

![]()

to represent the set of

Distance Measures

As mentioned above, the distance between subspaces can be geometrically characterized by their principal angles. And, of course, there is a relationship between the principal angles of two subspaces and their distance in the Grassmann manifolds. In fact, the principal angles induce several distance metrics on the Grassmann manifold ![]() , which in turn provides a topological structure to the set of all

, which in turn provides a topological structure to the set of all ![]() –dimensional subspaces in a

–dimensional subspaces in a ![]() –dimensional space [1,2].

–dimensional space [1,2].

For instance, let’s consider the squared projection-Frobenius distance

![Rendered by QuickLaTeX.com \[d^2_\text{PF}(\mathbf{X}_k,\mathbf{X}_j) = \sum_{i=1}^N \sin^2(\theta_{k,j}(i)) = \ N - \mathrm{tr}({\mathbf{X}}_k {\mathbf{X}}_j)\]](https://ksp-windmill-itn.eu/wp-content/ql-cache/quicklatex.com-911049a3c53b9fe89a3c32cded12e5f5_l3.png)

where ![]() denotes the trace of a matrix. This similarity represents how far two subspaces are based on the cosinus of their principal angles. Similarly, one can also relate the principal angles to the pseudo-determinant (product of non-zero eigenvalues) of the product

denotes the trace of a matrix. This similarity represents how far two subspaces are based on the cosinus of their principal angles. Similarly, one can also relate the principal angles to the pseudo-determinant (product of non-zero eigenvalues) of the product ![]() , in which case we end up with the squared Fubini-Study distance that can be described by

, in which case we end up with the squared Fubini-Study distance that can be described by

![Rendered by QuickLaTeX.com \[d^2_\text{FS}(\mathbf{H}_k,\mathbf{H}_j) = \prod_{i=1}^N \cos^2(\theta_{k,j}(i)) = \mathrm{pdet}({\mathbf{X}}_k {\mathbf{X}}_j)\]](https://ksp-windmill-itn.eu/wp-content/ql-cache/quicklatex.com-d89e5013995e4c2808683cb8afd2f9b3_l3.png)

, where ![]() denotes the pseudo-determinant. The main advantage of

denotes the pseudo-determinant. The main advantage of ![]() with respect to other metrics is the fact that it can be computed without the need for eigendecompositions.

with respect to other metrics is the fact that it can be computed without the need for eigendecompositions.

Note that these are not the only distances that one can define between points in the Grassmann manifold. There are several other measures, and the table below makes a summary of the four most used ones. For further details in these metrics we refer the reader to [3].

| Name | Based on Principal Angles | Based on Bases |

| Projection-Frobenius | ||

| Fubini-Study | ||

| Arclength | – | |

| Spectral Distance | – |

Comparing Manifolds of Different Dimensions

So far we have primarily focused on quantifying the alignment (distance) of two equidimensional subspaces. However, in real-world applications [1,2,8,9], it is very common to encounter scenarios where the comparison of subspaces with distinct dimensions is made necessary. With that in mind, distinct research groups have come up with proposals for computing a distance between subspaces of different dimensions — containment gap [4], symmetric direction [5], distance and Schubert varieties [6]. However, in such approaches, one often needs to perform an extra step (normally involving a Monte Carlo simulation) in order to account for statistical significance of the comparison. In order to overcome this and other difficulties, we have recently proposed [1] a purely statistical comparison mechanism based on the asymptotic behaviour of the projection-Frobenius distance. The table below summarizes this and other methods proposed in the literature.

| Measure between ( | Idea behind it | |

| Containment Gap | Normalising subspaces distance based on the smaller subspace | |

| Symmetric Direction | Frobenius norm based solution | |

| Schubert Varieties | Compare subpaces in the doubly infinite Grassmannian See [6] for details in depends on the metric of choice | |

| Asymptotic behavior | With first and second-order statistics of metric |

References

[1] R. Pereira, X. Mestre and D. Gregoratti, “Subspace Based Hierarchical Channel Clustering in Massive MIMO,” GLOBECOM 2021 – 2021 IEEE Global Communications Conference, 2021, pp. 1-6. (in press)

[2] Zhang, Jiayao, et al. “Grassmannian learning: Embedding geometry awareness in shallow and deep learning.” arXiv preprint arXiv:1808.02229 (2018).

[3] Edelman, A., Arias, T. A., and Smith, S. T. (1998). The geometry of algorithms with orthogonality constraints. SIAM journal on Matrix Analysis and Applications, 20(2), pp. 303-353.

[4] C. A. Beattie, M. Embree, and D. C. Sorensen, “Convergence of polynomial restart Krylov methods for eigenvalue computations,” SIAM review, vol. 47, no. 3, pp. 492–515, 2005.

[5] X. Sun, L. Wang, and J. Feng, “Further Results on the Subspace Distance,” Pattern recognition, vol. 40, no. 1, pp. 328–329, 2007.

[6] K. Ye and L.-H. Lim, “Schubert Varieties and Distances between Subspaces of Different Dimensions,” SIAM Journal on Matrix Analysis and Applications, vol. 37, no. 3, pp. 1176–1197, 2016.

[7] Britannica, The Editors of Encyclopaedia. “Vector space”. Encyclopedia Britannica, 19 May. 2011, https://www.britannica.com/science/vector-space. Accessed 18 October 2021.

[8] Keng, Brian. “Manifolds: A Gentle Introduction”. bjlkeng.github.io/posts/manifolds/. Accessed 18 October 2021.

[9] Bendokat, T., Zimmermann, R., & Absil, P. A. (2020). A Grassmann manifold handbook: Basic geometry and computational aspects. arXiv preprint arXiv:2011.13699.